Person Recognition with Python + OpenCV, Part 3

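In the previous two posts I collected the training data. The catch is that neither method is suited to gathering a large amount of data. For my own face I can just keep shooting video and pull more frames, but the scraper could only fetch 20 images at a time. Google Image Search seems to have some kind of countermeasure in place. I considered improving the scraper to get more data, but since I'm going to train a model anyway, this time I'll augment the data instead.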

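I believe I said in Part 1 that I wouldn't do data augmentation. That was a lie. So this time I'll augment the data, train on it, and check whether the model can learn to recognize my face.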

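For augmentation I used four kinds of processing: Gaussian noise, salt-and-pepper noise, smoothing, and affine transformation (rotation).

I wrote the augmentation routines with OpenCV as follows.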

    import cv2
    import numpy as np


    class DataPadder:
        """Data augmentation helpers for H x W x 3 uint8 images."""

        def padding_gammmanoise(self, img, mean=0, sigma=15):
            # Add Gaussian noise, then clip back to the valid 8-bit range.
            row, col, ch = img.shape
            gauss = np.random.normal(mean, sigma, (row, col, ch))
            gauss_img = img.astype(np.float64) + gauss
            return np.clip(gauss_img, 0, 255).astype(np.uint8)

        def padding_saltpappernoise(self, img, s_vs_p=0.5, amount=0.004):
            row, col, ch = img.shape
            sp_img = img.copy()

            # Salt: set randomly chosen pixels to white.
            # randint's upper bound is exclusive, so use row/col directly.
            num_salt = int(np.ceil(amount * img.size * s_vs_p))
            rows = np.random.randint(0, row, num_salt)
            cols = np.random.randint(0, col, num_salt)
            sp_img[rows, cols] = (255, 255, 255)

            # Pepper: set randomly chosen pixels to black.
            num_pepper = int(np.ceil(amount * img.size * (1. - s_vs_p)))
            rows = np.random.randint(0, row, num_pepper)
            cols = np.random.randint(0, col, num_pepper)
            sp_img[rows, cols] = (0, 0, 0)
            return sp_img

        def padding_smoothing(self, img, mask):
            # mask is the averaging kernel size, e.g. (5, 5)
            return cv2.blur(img, mask)

        def padding_affine(self, img, rotate, scale=1.0):
            row, col, ch = img.shape
            # OpenCV takes the center as (x, y) and the output size as (width, height).
            center = (col / 2, row / 2)
            matrix = cv2.getRotationMatrix2D(center, rotate, scale)
            return cv2.warpAffine(img, matrix, (col, row))
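To get a feel for what `amount=0.004` means in the salt-and-pepper routine, here is a small back-of-the-envelope sketch. It only reproduces the count arithmetic in plain Python; `noise_counts` is a name I made up for this illustration, and the 64x64x3 size is the one used later in this post. Note that the count is based on the array size (height x width x channels), not on the number of pixels, and that coordinates are drawn with replacement, so the number of distinct pixels changed can be slightly lower.

```python
import math


def noise_counts(height, width, channels, amount=0.004, s_vs_p=0.5):
    """Return (num_salt, num_pepper) using the same formula as the routine above."""
    size = height * width * channels  # equals ndarray.size for an H x W x C image
    num_salt = math.ceil(amount * size * s_vs_p)
    num_pepper = math.ceil(amount * size * (1.0 - s_vs_p))
    return num_salt, num_pepper


salt, pepper = noise_counts(64, 64, 3)
print(salt, pepper)  # 25 25
```

So a 64x64 color image gets at most 25 white and 25 black pixels out of 4096, which is a fairly light amount of noise.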

Then I use this class to augment the images, like so:

    import os
    import time

    import cv2

    from datapadder import DataPadder
    from dataread import DataRead

    dr = DataRead()
    padder = DataPadder()

    dr.write_pathlabel('dataset', 'path.txt')
    imgdatas = dr.read_imgdata('path.txt', (64, 64), 2)

    myface_img = []
    face_img = []
    rotates = [45, 90, 135, 180, 225, 270, 315]


    def augment(img):
        # Gaussian noise, salt-and-pepper, smoothing, and one rotation per angle
        out = [
            padder.padding_gammmanoise(img),
            padder.padding_saltpappernoise(img),
            padder.padding_smoothing(img, (5, 5)),
        ]
        out.extend(padder.padding_affine(img, rotate) for rotate in rotates)
        return out


    for img, label in imgdatas:
        # label[1] == 1 marks images of my own face
        if label[1] == 1:
            myface_img.extend(augment(img))
        else:
            face_img.extend(augment(img))

    # cv2.imwrite does not create missing directories, so make them first
    os.makedirs('paddimg/myface', exist_ok=True)
    os.makedirs('paddimg/face', exist_ok=True)

    now = int(time.time())
    for i, img in enumerate(myface_img):
        cv2.imwrite('paddimg/myface/' + str(now) + str(i) + '.png', img)
    for i, img in enumerate(face_img):
        cv2.imwrite('paddimg/face/' + str(now) + str(i) + '.png', img)
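As a rough estimate of how much this multiplies the data, here is a tiny sketch of the arithmetic. The constant and function names are made up for illustration, and the 20 is simply the number of images one scraper run gave me. Keep in mind that the script writes only the augmented variants to `paddimg/`, not the originals.

```python
ROTATIONS = [45, 90, 135, 180, 225, 270, 315]  # angles used in the script above

# Gaussian noise + salt-and-pepper + smoothing, plus one image per rotation angle
VARIANTS_PER_IMAGE = 3 + len(ROTATIONS)


def augmented_count(num_source_images):
    """Number of augmented images produced from num_source_images originals."""
    return num_source_images * VARIANTS_PER_IMAGE


print(VARIANTS_PER_IMAGE)   # 10
print(augmented_count(20))  # 200
```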

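Running this turns images like these

into images like these.

An Indian man suddenly shows up here, but he really is in the original images; he just isn't visible in the first screenshot.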

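Now I finally have enough data for training. Time for the long-awaited training itself.

This time I train a CNN with TensorFlow.

I implemented two convolutional layers followed by fully connected layers in TensorFlow. The CNN implementation is below.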

    import numpy as np
    import tensorflow as tf  # written for the TensorFlow 1.x graph API (tf.Session, tf.placeholder)


    class TensorCNN:
        def __init__(self, train_batch, test_batch, width, height):
            # Set model parameters
            self.trainbatch_size = train_batch
            self.testbatch_size = test_batch
            self.learning_rate = 0.01
            self.image_width = width
            self.image_height = height
            self.target_size = 2
            self.num_channels = 3  # color images (greyscale would be 1 channel)
            self.conv1_features = 20
            self.conv1_ksize = 4
            self.conv2_features = 30
            self.conv2_ksize = 4
            self.max_pool_size1 = 2  # NxN window for 1st max pool layer
            self.max_pool_size2 = 2  # NxN window for 2nd max pool layer
            self.fully_connected_size1 = 100

        def save_model(self, path):
            saver = tf.train.Saver()
            saver.save(self.sess, path)

        def load_model(self, path):
            saver = tf.train.Saver()
            saver.restore(self.sess, path)

        def net_init(self):
            self.sess = tf.Session()
            with tf.variable_scope("net") as scope:
                self.val_init()
                self.weight_init()
                self.model_init()
                self.loss_init()
                self.predict_init()
                self.optimizer_init()
            # Initialize Variables
            init = tf.global_variables_initializer()
            self.sess.run(init)

        def end(self):
            self.sess.close()

        def train_run(self, batch_x, batch_t):
            train_dict = {self.x_input: batch_x, self.y_target: batch_t,
                          self.keep_prob: 0.5}
            self.sess.run(self.train_step, feed_dict=train_dict)
            # Note: loss/accuracy here are measured with dropout still active
            temp_train_loss, temp_train_preds = self.sess.run(
                [self.loss, self.prediction], feed_dict=train_dict)
            temp_train_acc = self.get_accuracy(temp_train_preds, batch_t)
            return temp_train_acc, temp_train_loss

        def test_run(self, batch_testx, batch_testt):
            test_dict = {self.eval_input: batch_testx,
                         self.eval_target: batch_testt,
                         self.keep_prob: 1.0}
            temptest_loss, temptest_preds = self.sess.run(
                [self.test_loss, self.test_prediction], feed_dict=test_dict)
            temp_test_acc = self.get_accuracy(temptest_preds, batch_testt)
            return temp_test_acc, temptest_loss

        def val_init(self):
            # Placeholders have fixed batch sizes, so only full batches can be fed
            x_input_shape = (self.trainbatch_size, self.image_width,
                             self.image_height, self.num_channels)
            self.x_input = tf.placeholder(tf.float32, x_input_shape)
            self.y_target = tf.placeholder(
                tf.int32, (self.trainbatch_size, self.target_size))

            eval_input_shape = (self.testbatch_size, self.image_width,
                                self.image_height, self.num_channels)
            self.eval_input = tf.placeholder(tf.float32, shape=eval_input_shape)
            self.eval_target = tf.placeholder(
                tf.int32, shape=(self.testbatch_size, self.target_size))

            predict_input_shape = (1, self.image_width,
                                   self.image_height, self.num_channels)
            self.predict_input = tf.placeholder(tf.float32, shape=predict_input_shape)
            self.predict_target = tf.placeholder(
                tf.int32, shape=(1, self.target_size))

        def weight_init(self):
            # Convolutional layer variables
            # Parenthesized so that this is sqrt(1 / (H * W)); without the
            # parentheses the expression evaluates to sqrt(1) = 1.
            conv1_initval = np.sqrt(1 / (self.image_height * self.image_width))
            self.conv1_weight = tf.Variable(tf.truncated_normal(
                [self.conv1_ksize, self.conv1_ksize,
                 self.num_channels, self.conv1_features],
                stddev=conv1_initval, dtype=tf.float32))
            self.conv1_bias = tf.Variable(tf.zeros(
                [self.conv1_features], dtype=tf.float32))

            num_units = ((self.image_height - self.conv1_ksize) / 2) ** 2
            conv2_initval = np.sqrt(1 / num_units)
            self.conv2_weight = tf.Variable(tf.truncated_normal(
                [self.conv2_ksize, self.conv2_ksize,
                 self.conv1_features, self.conv2_features],
                stddev=conv2_initval, dtype=tf.float32))
            self.conv2_bias = tf.Variable(
                tf.zeros([self.conv2_features], dtype=tf.float32))

            # Fully connected variables
            # Each pooling layer shrinks the image, so divide by the product
            # of the pool sizes (for 2 and 2 this equals the sum, 4).
            resulting_width = self.image_width // (
                self.max_pool_size1 * self.max_pool_size2)
            resulting_height = self.image_height // (
                self.max_pool_size1 * self.max_pool_size2)
            full1_input_size = resulting_width * resulting_height * self.conv2_features
            full1_initval = np.sqrt(1 / ((full1_input_size) / 2 + 1))
            self.full1_weight = tf.Variable(tf.truncated_normal(
                [full1_input_size, self.fully_connected_size1],
                stddev=full1_initval, dtype=tf.float32))
            self.full1_bias = tf.Variable(tf.truncated_normal(
                [self.fully_connected_size1], stddev=0.1, dtype=tf.float32))
            self.full2_weight = tf.Variable(tf.truncated_normal(
                [self.fully_connected_size1, self.target_size],
                stddev=np.sqrt(1 / self.fully_connected_size1), dtype=tf.float32))
            self.full2_bias = tf.Variable(
                tf.truncated_normal([self.target_size], stddev=0.1, dtype=tf.float32))

            # Dropout keep probability
            self.keep_prob = tf.placeholder(tf.float32)

        # Initialize Model Operations
        def my_conv_net(self, input_data):
            # First Conv-ReLU-MaxPool Layer
            conv1 = tf.nn.conv2d(input_data, self.conv1_weight,
                                 strides=[1, 1, 1, 1], padding='SAME')
            relu1 = tf.nn.relu(tf.nn.bias_add(conv1, self.conv1_bias))
            max_pool1 = tf.nn.max_pool(
                relu1, ksize=[1, self.max_pool_size1, self.max_pool_size1, 1],
                strides=[1, 2, 2, 1], padding='SAME')

            # Second Conv-ReLU-MaxPool Layer
            conv2 = tf.nn.conv2d(max_pool1, self.conv2_weight,
                                 strides=[1, 1, 1, 1], padding='SAME')
            relu2 = tf.nn.relu(tf.nn.bias_add(conv2, self.conv2_bias))
            max_pool2 = tf.nn.max_pool(
                relu2, ksize=[1, self.max_pool_size2, self.max_pool_size2, 1],
                strides=[1, 2, 2, 1], padding='SAME')
            self.max_pool2 = max_pool2

            # Transform Output into a 1xN layer for next fully connected layer
            final_conv_shape = max_pool2.get_shape().as_list()
            final_shape = (final_conv_shape[1] * final_conv_shape[2]
                           * final_conv_shape[3])
            flat_output = tf.reshape(max_pool2, [final_conv_shape[0], final_shape])

            # First Fully Connected Layer
            fully_connected1 = tf.nn.relu(tf.add(
                tf.matmul(flat_output, self.full1_weight), self.full1_bias))
            fully_connected1 = tf.nn.dropout(fully_connected1, self.keep_prob)

            # Second Fully Connected Layer
            final_model_output = tf.add(
                tf.matmul(fully_connected1, self.full2_weight), self.full2_bias)
            self.fc2 = final_model_output
            return final_model_output

        def model_init(self):
            # The three graphs share the same weight variables
            self.model_output = self.my_conv_net(self.x_input)
            self.test_model_output = self.my_conv_net(self.eval_input)
            self.predict_model_output = self.my_conv_net(self.predict_input)

        def loss_init(self):
            # Declare Loss Function (softmax cross entropy)
            self.loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(
                logits=self.model_output, labels=self.y_target))
            self.test_loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(
                logits=self.test_model_output, labels=self.eval_target))

        def predict_init(self):
            # Create a prediction function
            self.prediction = tf.nn.softmax(self.model_output)
            self.test_prediction = tf.nn.softmax(self.test_model_output)
            self.simple_predict = tf.nn.softmax(self.predict_model_output)

        # Create accuracy function
        def get_accuracy(self, logits, targets):
            # Accuracy in percent; targets are one-hot labels
            batch_predictions = np.argmax(logits, axis=1)
            num_correct = np.sum(np.equal(batch_predictions,
                                          np.argmax(targets, axis=1)))
            return 100. * num_correct / batch_predictions.shape[0]

        def optimizer_init(self):
            my_optimizer = tf.train.MomentumOptimizer(self.learning_rate, 0.9)
            self.train_step = my_optimizer.minimize(self.loss)
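As a sanity check on the shapes, here is a small sketch (not part of the class) of how the input size of the first fully connected layer comes out. With `'SAME'` padding the stride-1 convolutions keep the spatial size, and each stride-2 max pool produces ceil(size / 2). The helper names `same_pool_output` and `flattened_size` are made up for this illustration; the defaults mirror the 30 feature maps and two pooling layers used here.

```python
import math


def same_pool_output(size, stride=2):
    """Output length of a 'SAME'-padded layer applied with the given stride."""
    return math.ceil(size / stride)


def flattened_size(height, width, conv2_features=30, num_pools=2):
    """Number of values fed to the first fully connected layer."""
    for _ in range(num_pools):
        height = same_pool_output(height)
        width = same_pool_output(width)
    return height * width * conv2_features


print(flattened_size(64, 64))  # 7680
```

For a 64x64 input that is 64 -> 32 -> 16 in each dimension, so 16 x 16 x 30 = 7680 inputs, which has to match the first dimension of `full1_weight`.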

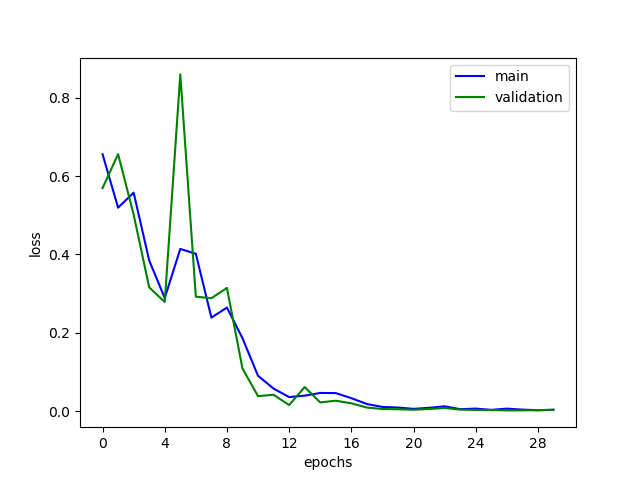

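The figures show the results of training with this CNN class: accuracy and loss, respectively.

Training appears to have converged. That said, the original image set was small, so I'm curious whether the model can actually recognize a person in practice.

For reference, here is the code I used to train and plot.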

    import matplotlib.pyplot as plt
    import matplotlib.ticker as ticker
    import numpy as np

    from cnn import TensorCNN
    from dataread import DataRead

    IMG_HEIGHT = 64
    IMG_WIDTH = 64
    CH_NUM = 3
    TARGET_SIZE = 2
    TRAIN_BATCH = 100
    TEST_BATCH = 90
    EPOCHS = 30

    dr = DataRead()
    dr.write_pathlabel('dataset', 'path.txt')
    dr.import_data('path.txt', (IMG_HEIGHT, IMG_WIDTH), TARGET_SIZE)
    data = dr.get_data()

    # 80% of the data for training, the rest for testing
    TRAIN_DATA_SIZE = int(len(data) * 0.8)
    TRAIN_DATA_SET = data[:TRAIN_DATA_SIZE]
    TEST_DATA_SET = data[TRAIN_DATA_SIZE:]

    cnn_net = TensorCNN(TRAIN_BATCH, TEST_BATCH, IMG_WIDTH, IMG_HEIGHT)
    cnn_net.net_init()


    def deivide_datasets(data_sets):
        # Split a list of (image, label) pairs into an image array and a label array
        image_ndarray = np.array(
            [np.reshape(img, (IMG_HEIGHT, IMG_WIDTH, CH_NUM)) for img, _ in data_sets],
            dtype=np.float64)
        label_ndarray = np.array(
            [np.reshape(label, TARGET_SIZE) for _, label in data_sets],
            dtype=np.float64)
        return image_ndarray, label_ndarray


    def get_batch_dataset(img_dataset, label_dataset, indexes):
        # Pick out one mini-batch by index
        return img_dataset[indexes], label_dataset[indexes]


    train_imgdata, train_label = deivide_datasets(TRAIN_DATA_SET)
    test_imgdata, test_label = deivide_datasets(TEST_DATA_SET)

    epochlogs = []
    for i in range(EPOCHS):
        epochres = []
        # Mini-batch training
        train_perm = np.random.permutation(len(train_imgdata))
        test_perm = np.random.permutation(len(test_imgdata))
        train_accuracy = 0
        train_loss = 0
        test_accuracy = 0
        test_loss_ = 0

        cnt = 0
        # "+ 1" so the last full batch is used when the size divides evenly
        for j in range(0, len(train_imgdata) - TRAIN_BATCH + 1, TRAIN_BATCH):
            batch_x, batch_t = get_batch_dataset(
                train_imgdata, train_label, train_perm[j:j + TRAIN_BATCH])
            temp_train_acc, temp_train_loss = cnn_net.train_run(batch_x, batch_t)
            train_loss += temp_train_loss
            train_accuracy += temp_train_acc
            cnt += 1
        print('%d epoch Accuracy = %.2f%% Loss = %.3e'
              % (i, train_accuracy / cnt, train_loss / cnt))
        epochres.append(train_accuracy / cnt)
        epochres.append(train_loss / cnt)

        cnt = 0
        for j in range(0, len(test_imgdata) - TEST_BATCH + 1, TEST_BATCH):
            batch_testx, batch_testt = get_batch_dataset(
                test_imgdata, test_label, test_perm[j:j + TEST_BATCH])
            temp_test_acc, temp_test_loss = cnn_net.test_run(batch_testx, batch_testt)
            test_loss_ += temp_test_loss
            test_accuracy += temp_test_acc
            cnt += 1
        print('%d epoch TestAccuracy = %.2f%% TestLoss = %.3e'
              % (i, test_accuracy / cnt, test_loss_ / cnt))
        epochres.append(test_accuracy / cnt)
        epochres.append(test_loss_ / cnt)
        epochlogs.append(epochres)

    #cnn_net.save_model('models/')

    # Columns: train accuracy, train loss, test accuracy, test loss
    trainresult = np.array(epochlogs)
    x_epochs = np.arange(len(epochlogs))

    plt.plot(x_epochs, trainresult[:, 0], label='main', color='b')
    plt.plot(x_epochs, trainresult[:, 2], label='validation', color='g')
    plt.ylabel('accuracy[%]')
    plt.xlabel('epochs')
    plt.gca().xaxis.set_major_locator(ticker.MaxNLocator(integer=True))
    plt.legend()
    plt.show()

    plt.plot(x_epochs, trainresult[:, 1], label='main', color='b')
    plt.plot(x_epochs, trainresult[:, 3], label='validation', color='g')
    plt.ylabel('loss')
    plt.xlabel('epochs')
    plt.gca().xaxis.set_major_locator(ticker.MaxNLocator(integer=True))
    plt.legend()
    plt.show()
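One thing to watch in the mini-batch loops: because the placeholders have a fixed batch size, only full batches can be fed, so the loop bounds decide which samples are actually used. The sketch below shows the start offsets for a few dataset sizes; `batch_starts` is a hypothetical helper for illustration, not something from the script.

```python
def batch_starts(num_samples, batch_size):
    """Start offsets of every full batch that fits in num_samples."""
    return list(range(0, num_samples - batch_size + 1, batch_size))


print(batch_starts(300, 100))  # [0, 100, 200]
print(batch_starts(350, 100))  # [0, 100, 200]
print(batch_starts(50, 100))   # []
```

A stop value of `num_samples - batch_size` (without the `+ 1`) would drop the last full batch whenever the sample count is an exact multiple of the batch size. The last case also shows that with fewer samples than one batch the loop never runs, and averaging by the batch count would then divide by zero, so the dataset needs at least one full batch.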

Next time, I'll use the saved model to try person recognition on live camera footage.